It all started with using AI image generation capabilities to (re)generate media from minimal data. The recording of metaverse experiences supports various use cases in collaboration, VR training, and more. Such Metaverse Recordings can be created as multimedia and time series data during the 3D rendering process of the audio–video stream for the user. To search in a collection of recordings, Multimedia Information Retrieval methods can be used. Also, querying and accessing Metaverse Recordings based on the recorded time series data is possible. The presentation of human-perceivable results of time-series-based Metaverse Recordings is a challenge. This paper demonstrates an approach to generating human-perceivable media from time-series-based Metaverse Recordings with the help of generative artificial intelligence. Our findings show the general feasibility of the approach and outline the current limitations and remaining challenges. Read the full paper
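To make the idea a bit more concrete, here is a minimal sketch of one way recorded time series data could be turned into a text prompt for an image generator. It is not the method from the paper: the `Sample` fields, the `build_prompt` helper, and the example values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical time series entry of a Metaverse Recording."""
    t: float      # seconds since the recording started
    scene: str    # assumed scene description
    avatar: str   # assumed avatar label
    action: str   # assumed action label


def build_prompt(samples, start, end):
    """Summarize the samples in [start, end] as a text prompt (illustrative only)."""
    window = [s for s in samples if start <= s.t <= end]
    if not window:
        return ""
    scene = window[0].scene
    phrases = []
    for s in window:
        phrase = f"{s.avatar} {s.action}"
        if phrase not in phrases:  # keep each distinct observation once
            phrases.append(phrase)
    return f"A 3D virtual world scene in {scene}: " + ", ".join(phrases)


# Hypothetical recording data, invented for this example.
recording = [
    Sample(0.0, "a virtual classroom", "a teacher avatar", "standing at a whiteboard"),
    Sample(1.0, "a virtual classroom", "a student avatar", "raising a hand"),
    Sample(2.0, "a virtual classroom", "a teacher avatar", "standing at a whiteboard"),
    Sample(9.0, "a virtual lobby", "a visitor avatar", "walking"),
]

prompt = build_prompt(recording, 0.0, 5.0)
print(prompt)
```

The resulting string could then be handed to any text-to-image model. How well the generated image matches the original session is exactly the kind of open question the paper's limitations refer to.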

Category: Metaverse

Integration of Metaverse and Multimedia Information Retrieval

Diving into the vibrant intersection of the Metaverse and Multimedia Information Retrieval (MMIR), we uncover a fascinating journey that’s shaping the future of Metaverse integration with MMIR. Imagine stepping into a universe where the boundaries between physical and digital realities blur, creating an immersive world teeming with multimedia content. This is the Metaverse, a collective virtual space, built on the pillars of augmented and virtual reality technologies.

At the heart of integrating these worlds lies the challenge of efficiently indexing, retrieving, and making sense of a deluge of multimedia content—ranging from images, videos, to 3D models and beyond. Enter the realm of Multimedia Information Retrieval (MMIR), a sophisticated field dedicated to the art and science of finding and organizing multimedia data.

The research explored here, my Ph.D. project, ventures into this nascent domain, proposing innovative frameworks for bridging the Metaverse with MMIR. The work unveils two primary narratives: one, how we can leverage MMIR to navigate the vast expanses of the Metaverse, and two, how the Metaverse itself can generate new forms of multimedia for MMIR to organize and retrieve.

In the first scenario, imagine you’re an educator in the Metaverse, looking to build an interactive, virtual classroom. Through the integration of MMIR, you can seamlessly pull educational content—be it historical artifacts in 3D, immersive documentaries, or interactive simulations—right into your virtual space, enriching the learning experience like never before.

The second scenario flips the perspective, showcasing the Metaverse as a prolific generator of multimedia content. From virtual tours and events to user-generated content and beyond, every action and interaction within the Metaverse creates data ripe for MMIR’s picking. This opens up a new frontier for content creators and researchers alike, offering fresh avenues for creativity, analytics, and even virtual heritage preservation.

Navigating these possibilities, the research presents sophisticated models and architectures, such as the Generic MMIR Integration Architecture for Metaverse Playout (GMIA4MP) and the Process Framework for Metaverse Recordings (PFMR). These frameworks lay the groundwork for seamless interaction between the Metaverse and MMIR systems, ensuring content is not only accessible but meaningful and contextual.

To bring these concepts to life, let’s visualize a diagram illustrating the flow from multimedia creation in the Metaverse, through its processing by MMIR systems, to its ultimate retrieval and utilization by end-users. This visualization underscores the cyclical nature of creation and discovery in this integrated ecosystem.

In essence, this research lights the path toward a future where the Metaverse and MMIR coalesce, creating a symbiotic relationship that enhances how we create, discover, and interact with multimedia content. It’s a journey not just of technological innovation, but of reimagining the very fabric of our digital experiences.

Let’s create an image to encapsulate this vibrant future: Picture a vast, sprawling virtual landscape, brimming with diverse multimedia content—3D models, videos, images, and interactive elements. Within this digital realm, avatars of researchers, educators, and creators move and interact, bringing to life a dynamic ecosystem where the exchange of multimedia content is fluid, intuitive, and boundlessly creative. This visualization, rooted in the essence of the research, will capture the imagination, inviting readers to envision the endless possibilities at the intersection of the Metaverse and MMIR.

256 Metaverse Records Dataset

I’m thrilled to announce the availability of the 256-MetaverseRecords Dataset, a dataset for experiments with machine learning technology for metaverse recordings. This dataset represents a significant step forward in the exploration of the integration of virtual worlds in Multimedia Information Retrieval.

The dataset was created to explore the use of metaverse virtual worlds and evaluate the performance of feature extraction methods on Metaverse Recordings. The dataset contains 256 video records of user sessions in virtual worlds, mostly based on screen recordings.

Early in October, I contributed to IEEE MetroXRAINE 2023 in Milano, Italy, where I presented the approach for my PhD research. The paper is titled “Towards the Integration of Metaverse and Multimedia Information Retrieval”. In a nutshell, integrating the metaverse with Multimedia Information Retrieval (MMIR) can be grouped into at least two cases: the metaverse uses MMIR, and MMIR processes metaverse-produced multimedia. My research concentrates on the second case, the integration of metaverse-produced content in MMIR. But more on this in another post. With the submission, I sent a graphical abstract, and hey, it was awarded!

IEEE MetroXRAINE is an interdisciplinary conference in the fields of metrology, Extended Reality, Artificial Intelligence, and Neural Engineering. I tried an EEG-based brain interface for a game. It was an awesome experience, and I’m excited to see more of this technology in the future. But for sure, I need to concentrate more on my research!

Big Data Week & AWS User Group Bonn

In the past weeks, I experienced the speaker’s life. I was visiting the IBC in Amsterdam for a few days, presenting the company I’m working for. Early in October, I presented a talk about AI use cases in the Media Landscape at the Big Data Week in Bucharest, Romania. And last week, I gave a similar talk at home, for the AWS User Group in Bonn.

While talking about your thoughts, models, and experiences is cool, the bigger stages still make me nervous. However, practice and experience (aka training) help a lot.

AI is a hot topic, and not only generative AI: more and more of the business in media and elsewhere is driven by data. Customer experiences are customized by AI, manual effort vanishes through AI, and even content is created by AI. In Multimedia research, we say “Multimedia is everywhere”, but I think we can state “AI is everywhere”.

Metaverse 2023: Expectation vs. Reality

The concept of a metaverse, or a virtual world that is fully immersive and seamlessly integrates with the real world, has long captured the imagination of science fiction writers and technology enthusiasts. In recent years, there has been a surge in the development of virtual reality and augmented reality technologies, leading many to believe that we are on the cusp of realizing the metaverse.

In 2022, it was expected that the metaverse would be a fully realized and integrated part of our daily lives. People would be able to work, socialize, and even go on vacations entirely within virtual worlds. However, as we now know, the reality of the metaverse in 2022 has not quite lived up to these expectations.

Is Metaverse multimedia?

The metaverse has been a hot topic in the past year. Besides the hype and the question of whether it will be the next big thing or just another failed technology (such as 3D TV), one could ask whether the metaverse is multimedia. The following is a formal verification of this question. First, a description of the metaverse is needed, followed by a definition of multimedia, and finally the verification of whether the metaverse is multimedia.

What is the Metaverse

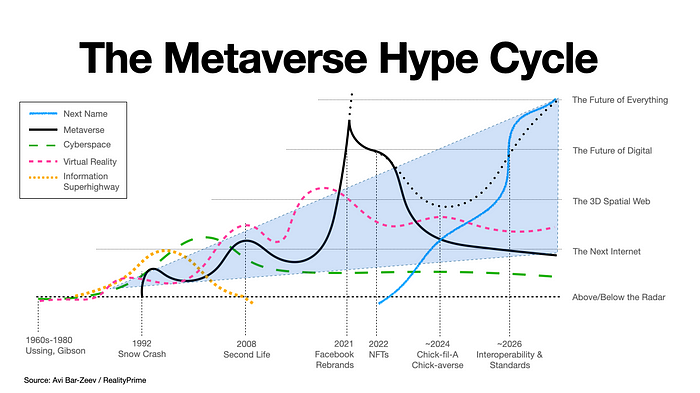

It isn’t easy to describe the metaverse precisely, because it is under heavy development. The term was coined in Neal Stephenson’s novel Snow Crash. Over time, it went through some iterations until the current climax, when Facebook decided to rebrand as Meta and go all in on the metaverse. Avi Bar-Zeev visualized the different stages of the metaverse perfectly.

As the metaverse is still under development, its definitions tend to be broad. Mystakidis defined the metaverse as follows:

“The Metaverse is the post-reality universe, a perpetual and persistent multiuser environment merging physical reality with digital virtuality. It is based on the convergence of technologies that enable multisensory interactions with virtual environments, digital objects and people such as virtual reality (VR) and augmented reality (AR). Hence, the Metaverse is an interconnected web of social, networked immersive environments in persistent multiuser platforms. It enables seamless embodied user communication in real-time and dynamic interactions with digital artifacts. Its first iteration was a web of virtual worlds where avatars were able to teleport among them. The contemporary iteration of the Metaverse features social, immersive VR platforms compatible with massive multiplayer online video games, open game worlds and AR collaborative spaces.” [1]

A primer on the Metaverse

Among the trending things in technology is the Metaverse. It lacks a precise definition, but in short, it is a perpetual digital multiuser space involving virtual and augmented reality. VR and AR technologies have been developing slowly over the last 10 years, so why is the Metaverse trending? A boost was given by Mark Zuckerberg and his decision to go all in on the metaverse. The rebranding of the company from Facebook to Meta is a bold step, echoing through the whole internet industry. Some people see it as nothing less than the next generation of the internet (Reference). So the metaverse is trending, and it is neither a simple thing nor a new thing. This article looks into the topic and many of its dimensions.